Welcome to the world of quantum computing, a technology that uses the rules of quantum physics to process information in ways classical computers cannot. In this article, we will unpack the basic principles and components of the field, then look at its potential applications and the hurdles it still faces.

What is Quantum Computing?

Quantum computing is an approach to computation rooted in quantum physics. Conventional computers rely on bits, each of which represents information as either 0 or 1. Quantum computers instead use qubits. A qubit can exist in a superposition of 0 and 1: a weighted combination of both states that, when measured, yields 0 or 1 with probabilities set by those weights. Superposition, together with entanglement and interference, is what gives quantum computers their distinctive capabilities.
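In standard notation, the state of a single qubit is written as a weighted combination of the basis states |0⟩ and |1⟩. The complex weights α and β, called amplitudes, are not named in the text above; they are the conventional way to make "a combination of 0 and 1" precise:

$$
|\psi\rangle = \alpha|0\rangle + \beta|1\rangle, \qquad |\alpha|^2 + |\beta|^2 = 1, \qquad P(0) = |\alpha|^2,\quad P(1) = |\beta|^2
$$

For example, α = β = 1/√2 gives an equal superposition: (1/√2)² = 1/2, so a measurement returns 0 half the time and 1 half the time.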

Limitations of Classical Computing:

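Before we go deeper, let us consider the limitations of classical computing. Classical computers have grown steadily more powerful, largely by shrinking their transistors, but as components approach atomic dimensions, quantum effects such as electron tunneling make further miniaturization increasingly difficult. Some problems are also intractable for classical machines no matter how the hardware improves: simulating a quantum system, for instance, requires resources that grow exponentially with the size of the system. Quantum computing offers a different model of computation that may sidestep some of these limits.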
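One concrete illustration of where classical machines struggle: storing the full quantum state of n qubits on a classical computer takes 2^n complex amplitudes. The short sketch below computes that memory cost. It assumes 16 bytes per amplitude (a double-precision complex number) and ignores the compression tricks a real simulator might use; `statevector_bytes` is a hypothetical helper name.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Bytes needed to store all 2**n_qubits complex amplitudes.

    Assumption: 16 bytes per amplitude (two 64-bit floats, like NumPy's complex128).
    """
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (10, 30, 50):
    # Convert bytes to GiB (2**30 bytes) for readability
    print(f"{n} qubits:", statevector_bytes(n) / 2**30, "GiB")
```

Under these assumptions, 30 qubits need 16 GiB, which fits on a workstation, while 50 qubits need 2^54 bytes (about 16 PiB), far beyond the memory of a single machine. Every additional qubit doubles the cost.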

The Birth of Quantum Computing:

The origins of quantum computing trace back to quantum theory, the branch of physics that emerged in the early 20th century. It was not until the early 1980s, however, that researchers such as Paul Benioff, Richard Feynman, and David Deutsch began to combine quantum theory with computer science. Feynman observed that classical computers simulate quantum systems only with great inefficiency and proposed building computers that themselves obey quantum rules; in 1985, Deutsch described a universal quantum computer. Thus, the concept of a quantum computer was born.

Understanding Quantum Mechanics:

To grasp the foundation of quantum computing, we need some quantum mechanics. Classical mechanics explains the macroscopic world well, but it fails to describe the behavior of light and matter at the atomic and subatomic levels. Max Planck (1900) and Albert Einstein (1905) introduced the notion of “quanta”: energy that is emitted or absorbed in discrete amounts rather than continuously. Louis de Broglie later proposed (1924), and experiments confirmed, that matter particles such as electrons and protons also have wave-like characteristics.
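Both ideas in this paragraph have compact formulas. Planck's relation gives the energy E of one quantum of light with frequency f (h is Planck's constant), and de Broglie's relation assigns a wavelength λ to a particle with momentum p:

$$
E = h f, \qquad \lambda = \frac{h}{p}
$$

As a rough worked example, take an electron (mass m ≈ 9.11 × 10⁻³¹ kg) moving at v = 10⁶ m/s, slow enough that the non-relativistic momentum p = mv applies:

$$
\lambda = \frac{h}{m v} \approx \frac{6.63 \times 10^{-34}\ \text{J s}}{(9.11 \times 10^{-31}\ \text{kg})(10^{6}\ \text{m/s})} \approx 7.3 \times 10^{-10}\ \text{m}
$$

That is about 0.7 nm, comparable to atomic dimensions, which is why an electron's wave-like behavior cannot be ignored inside atoms.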

The Marvels of Quantum Computing:

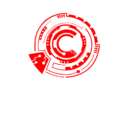

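With these foundations in place, let us turn to the core components of quantum computing. Qubits are its building blocks. Unlike a classical bit, which is always either 0 or 1, a qubit can be in a superposition of both. A register of n qubits is described by 2^n amplitudes, one for each possible bit string, and a quantum operation acts on all of them at once. This is often summarized as "performing many calculations in parallel", but that picture is incomplete: measuring the register returns only a single n-bit result. Quantum algorithms gain their advantage by using interference to amplify the amplitudes of correct answers and cancel those of wrong ones. The result is large speedups for certain problems, not across-the-board exponential processing power.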
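A small state-vector simulation makes this concrete. The sketch below assumes NumPy, and `uniform_superposition` and `measure` are hypothetical helper names. It applies a Hadamard gate (which maps |0⟩ to an equal superposition of |0⟩ and |1⟩) to each of n qubits starting from all zeros, then samples one measurement. The register carries 2^n amplitudes, yet the measurement returns a single n-bit string.

```python
import numpy as np

# Hadamard gate: maps |0> to (|0> + |1>)/sqrt(2)
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)


def uniform_superposition(n: int) -> np.ndarray:
    """Return the state after applying H to each of n qubits that start in |0...0>."""
    state = np.zeros(2**n, dtype=complex)
    state[0] = 1.0  # all qubits start in |0>
    h_all = np.array([[1]], dtype=complex)
    for _ in range(n):
        h_all = np.kron(h_all, H)  # builds H (x) H (x) ... (x) H
    return h_all @ state


def measure(state: np.ndarray, rng: np.random.Generator) -> str:
    """Sample one basis state with probability |amplitude|^2 and return it as a bit string."""
    probs = np.abs(state) ** 2
    probs /= probs.sum()  # guard against floating-point drift
    n = len(state).bit_length() - 1  # len(state) == 2**n
    index = int(rng.choice(len(state), p=probs))
    return format(index, f"0{n}b")


state = uniform_superposition(3)
print(len(state))          # 8 amplitudes for 3 qubits
print(np.abs(state) ** 2)  # each of the 8 outcomes has probability 1/8
print(measure(state, np.random.default_rng(seed=1)))  # one 3-bit string, e.g. a random outcome
```

Repeating the measurement on fresh copies of the state gives a different random bit string each time; no single run exposes all eight amplitudes.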

Harnessing Quantum Operations:

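Quantum operations are carried out by quantum gates, which manipulate the state of one or more qubits. Gates are the building blocks of quantum circuits, much as logic gates are the building blocks of classical circuits. Unlike most classical logic gates, however, quantum gates are reversible: each one is described by a unitary matrix acting on the qubits' amplitudes. Common examples include the Hadamard gate, which puts a qubit into an equal superposition, and the controlled-NOT (CNOT) gate, which flips a target qubit when a control qubit is 1 and can be used to entangle the two. By combining such gates, we can build circuits that carry out complex computations.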
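Because gates are matrices, a small circuit can be simulated with ordinary matrix multiplication. The sketch below assumes NumPy; `run_circuit` and `is_unitary` are hypothetical names. It applies a Hadamard gate to qubit 0 and then a CNOT (qubit 0 as control, qubit 1 as target, qubit 0 written leftmost) to produce an entangled Bell state, checking along the way that every gate is unitary.

```python
import numpy as np

H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
I2 = np.eye(2, dtype=complex)
# CNOT with qubit 0 as control and qubit 1 as target; basis order |00>, |01>, |10>, |11>
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)


def is_unitary(gate: np.ndarray) -> bool:
    """A valid quantum gate U satisfies U^dagger U = I."""
    return bool(np.allclose(gate.conj().T @ gate, np.eye(gate.shape[0])))


def run_circuit(gates: list, n_qubits: int) -> np.ndarray:
    """Apply each gate (a full 2^n x 2^n matrix) in order, starting from |0...0>."""
    state = np.zeros(2**n_qubits, dtype=complex)
    state[0] = 1.0
    for gate in gates:
        if not is_unitary(gate):
            raise ValueError("quantum gates must be unitary")
        state = gate @ state
    return state


# H on qubit 0 (identity on qubit 1), then CNOT: yields (|00> + |11>)/sqrt(2)
bell = run_circuit([np.kron(H, I2), CNOT], n_qubits=2)
print(np.round(bell.real, 3))
```

In the resulting state, measuring either qubit immediately fixes the other: the outcomes are always 00 or 11, each with probability 1/2. That correlation is entanglement.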

Quantum Algorithms:

Quantum algorithms are step-by-step procedures designed to run on quantum computers. They exploit properties such as superposition, entanglement, and interference to solve certain problems faster than the best known classical methods. Two well-known examples are Shor’s algorithm, which factors integers in polynomial time, whereas the best known classical algorithms take super-polynomial time, and Grover’s algorithm, which searches an unstructured collection of N items using about √N queries instead of the roughly N a classical search needs.
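Grover’s algorithm is small enough to simulate directly. The sketch below assumes NumPy and a single marked item, and `grover_search` is a hypothetical name. Rather than building gate matrices, it applies the two steps of each Grover iteration straight to the amplitudes: an oracle that flips the sign of the marked item's amplitude, and a diffusion step that reflects every amplitude about their mean. It runs about (π/4)√N iterations, the standard choice for one marked item.

```python
import numpy as np


def grover_search(n_qubits: int, marked: int) -> np.ndarray:
    """Simulate Grover's algorithm; return the measurement probability of each basis state."""
    N = 2**n_qubits
    state = np.full(N, 1 / np.sqrt(N))  # uniform superposition (Hadamard on every qubit)
    iterations = int(np.floor(np.pi / 4 * np.sqrt(N)))
    for _ in range(iterations):
        state[marked] *= -1               # oracle: flip the sign of the marked amplitude
        state = 2 * state.mean() - state  # diffusion: inversion about the mean
    return np.abs(state) ** 2


probs = grover_search(3, marked=5)
print(probs[5])  # probability of measuring the marked item
```

With 3 qubits (N = 8), two iterations raise the marked item's probability to about 0.945, compared with 1/8 = 0.125 for a random guess. The number of oracle calls grows like √N, which is the source of Grover’s quadratic speedup.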

The Road Ahead:

Despite its potential, quantum computing faces formidable obstacles. The most prominent is preserving the fragile state of qubits, which are susceptible to decoherence and to errors caused by interactions with their environment. Researchers are developing quantum error correction techniques, which spread the information of one logical qubit across several physical qubits so that errors can be detected and corrected without directly measuring, and thereby destroying, the encoded state.
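The simplest example of error correction is the three-qubit bit-flip code, which protects against a single bit-flip (X) error. The sketch below is a NumPy state-vector simulation under simplifying assumptions: only bit-flip errors occur, at most one qubit is affected, and the syndrome is read directly from the simulated state instead of being measured with extra ancilla qubits as real hardware would do. It encodes α|0⟩ + β|1⟩ as α|000⟩ + β|111⟩, checks the parities Z₀Z₁ and Z₁Z₂, and flips back the qubit they point to. All helper names are hypothetical.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)   # Pauli X: bit flip
Z = np.array([[1, 0], [0, -1]], dtype=complex)  # Pauli Z: used to build parity checks
I2 = np.eye(2, dtype=complex)


def on_qubit(gate: np.ndarray, k: int, n: int = 3) -> np.ndarray:
    """Embed a single-qubit gate acting on qubit k (0 = leftmost) into an n-qubit operator."""
    op = np.array([[1]], dtype=complex)
    for q in range(n):
        op = np.kron(op, gate if q == k else I2)
    return op


def encode(alpha: complex, beta: complex) -> np.ndarray:
    """Encode alpha|0> + beta|1> as the logical state alpha|000> + beta|111>."""
    state = np.zeros(8, dtype=complex)
    state[0b000] = alpha
    state[0b111] = beta
    return state


def syndrome(state: np.ndarray) -> tuple:
    """Eigenvalues (+1 or -1) of the parity checks Z0Z1 and Z1Z2.

    Simplification: read from the simulated state. These parities locate a single
    bit flip without revealing alpha or beta.
    """
    checks = (on_qubit(Z, 0) @ on_qubit(Z, 1), on_qubit(Z, 1) @ on_qubit(Z, 2))
    return tuple(int(round(np.vdot(state, c @ state).real)) for c in checks)


# Which qubit to flip back for each syndrome (None means no error detected)
CORRECTION = {(1, 1): None, (-1, 1): 0, (-1, -1): 1, (1, -1): 2}


def correct(state: np.ndarray) -> np.ndarray:
    """Apply X to the qubit indicated by the syndrome, if any."""
    flip = CORRECTION[syndrome(state)]
    return state if flip is None else on_qubit(X, flip) @ state


logical = encode(0.6, 0.8)  # 0.6**2 + 0.8**2 = 1
for k in range(3):
    corrupted = on_qubit(X, k) @ logical  # a single bit-flip error on qubit k
    print(k, syndrome(corrupted), np.allclose(correct(corrupted), logical))
```

Each single flip produces a distinct syndrome, so the correction restores α|000⟩ + β|111⟩ exactly. Two simultaneous flips would be misdiagnosed, and phase errors are not caught at all; practical codes such as the surface code handle both kinds of error with many more qubits.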

Applications of Quantum Computing:

Quantum computing could transform several fields. In machine learning, researchers are investigating whether quantum algorithms can speed up tasks such as optimization and linear algebra, although practical advantages remain largely unproven. In cryptography, a sufficiently large quantum computer running Shor’s algorithm could break widely used public-key schemes such as RSA, which has spurred the development of new, quantum-resistant encryption methods. Nevertheless, classical and quantum computers will most likely coexist, each suited to different kinds of problems.
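The cryptographic threat comes mainly from Shor’s algorithm. RSA's security rests on the difficulty of factoring large integers, and Shor’s algorithm reduces factoring to finding the period r of f(x) = a^x mod N; only that period-finding step needs a quantum computer. The sketch below shows the surrounding classical logic for toy values of N. A brute-force loop stands in for the quantum step, so it runs in exponential time and offers no speedup. The function names are hypothetical.

```python
from math import gcd
from typing import Optional, Tuple


def find_period(a: int, N: int) -> int:
    """Smallest r > 0 with a**r % N == 1.

    Brute force stands in for the quantum period-finding step; requires gcd(a, N) == 1.
    """
    r, value = 1, a % N
    while value != 1:
        value = (value * a) % N
        r += 1
    return r


def shor_factor(N: int, a: int) -> Optional[Tuple[int, int]]:
    """Classical post-processing of Shor's algorithm for a chosen base a."""
    g = gcd(a, N)
    if g != 1:
        return g, N // g  # lucky guess: a already shares a factor with N
    r = find_period(a, N)
    if r % 2 == 1:
        return None  # odd period: retry with a different a
    x = pow(a, r // 2, N)
    if x == N - 1:
        return None  # a^(r/2) is -1 mod N: retry with a different a
    p = gcd(x - 1, N)  # x^2 = 1 mod N with x != +-1, so this gcd is a nontrivial factor
    return p, N // p


print(shor_factor(15, 7))
```

For N = 15 and a = 7, the powers of 7 mod 15 run 7, 4, 13, 1, so r = 4, 7² mod 15 = 4, and gcd(3, 15) = 3 gives the factors 3 and 5. When a base fails, the algorithm simply picks another a at random.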

Conclusion:

Quantum computing, a fusion of quantum physics and computer science, invites the curious and the bold. Although it can seem complex, its fundamental concepts are within reach of anyone willing to study them. As researchers continue to push the boundaries of quantum hardware and algorithms, we move closer to understanding the full potential of this transformative technology.

Sources:

Nielsen, M. A., & Chuang, I. L. (2010). Quantum computation and quantum information. Cambridge: Cambridge University Press.

Preskill, J. (2018). Quantum computing in the NISQ era and beyond. Quantum, 2, 79.

Mermin, N. D. (2007). Quantum computer science: An introduction. Cambridge: Cambridge University Press.

Yanofsky, N. S., & Mannucci, M. A. (2008). Quantum computing for computer scientists. Cambridge: Cambridge University Press.

Ladd, T. D., Jelezko, F., Laflamme, R., Nakamura, Y., Monroe, C., & O’Brien, J. L. (2010). Quantum computers. Nature, 464(7285), 45-53.

Devitt, S. J., Munro, W. J., & Nemoto, K. (2013). Quantum error correction for beginners. Reports on Progress in Physics, 76(7), 076001.

Shor, P. W. (1997). Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer. SIAM Journal on Computing, 26(5), 1484-1509.

Grover, L. K. (1996). A fast quantum mechanical algorithm for database search. In Proceedings of the Twenty-Eighth Annual ACM Symposium on Theory of Computing (pp. 212-219).